Save yourself some time.

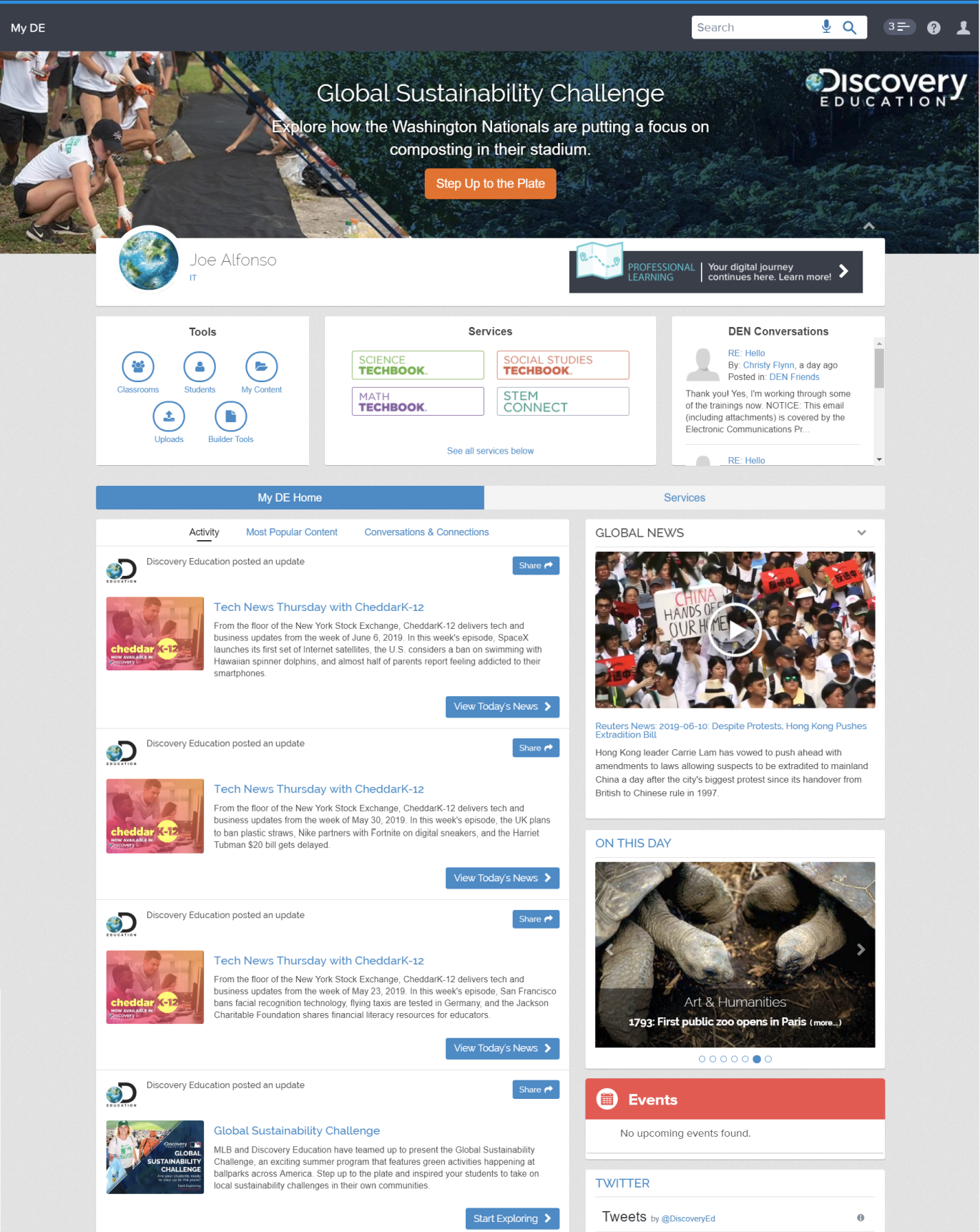

Redesigning the Home experience was the final step in the new Discovery Education Experience. After we understood what and how we were going to change the rest of the site, we could create a homepage that supported, facilitated, and aligned with the rest of the experience.

Disclaimer: Due to personally identifiable information (PII) and various privacy concerns, much of the content has been adjusted, hidden, or removed completely.

Throughout the project I took on and managed a number of roles. Our job was to "get stuff done" and that's what we made sure to do. Resources, timelines, personnel, and much else changed constantly, and I adapted as needed.

Working across the organization, I led the user experience and design of the revised Discovery Education Home Experience. After establishing requirements, use cases, personas, and other preliminary artifacts, I handed the project off for further iterations while I dedicated more time to research and usability.

I was in charge of planning and delegating throughout the project. After speaking with our U.K. team we teamed up and leveraged research that they had done. They had also taken into account student designs more heavily, which we then turned into production-ready designs after some review and refinement. Just as important as doing the research was collecting and digesting it in a usable manner so it could be integrated quickly.

Partnered with an excellent developer, we ensured all production designs were AA compliant, appropriate for screen readers, and designed around touch interfaces. We strive to give every student the same experience as the next, regardless of situation. We're legally obligated to be AA compliant, but that's a minimum, and we believe our work should result in a higher-quality product.

Working with the research team in the United Kingdom, we combined efforts to test with a larger and more diverse population. While we generally agreed on the methods used, the tools often varied due to permissions, funding, or process differences. This gave us the flexibility to try new ways of doing research while still retaining the formality of findings. If we needed a card sort and the currently active services didn't include one, we needed to plan accordingly and reallocate funds. Working within a budget, even a fair one, and having restrictions forced us to generate creative solutions if we wanted to stick to such an ambitious and active plan.

Identifying, testing, and leveraging modern tools like Figma, Maze.Design, Airtable, Hotjar, Invision, and more, we were able to record information with a level of access not commonly seen within EdTech.

I was able to reduce research costs and research time while increasing not only the quantity but also the quality of findings.

Something that can be limiting when testing in the educational technology industry is a lack of access to users' hardware due to security risks. Finding new and creative solutions, sometimes even repurposing products that weren't made for research, has become easier with the evolution of web development and technology in general.

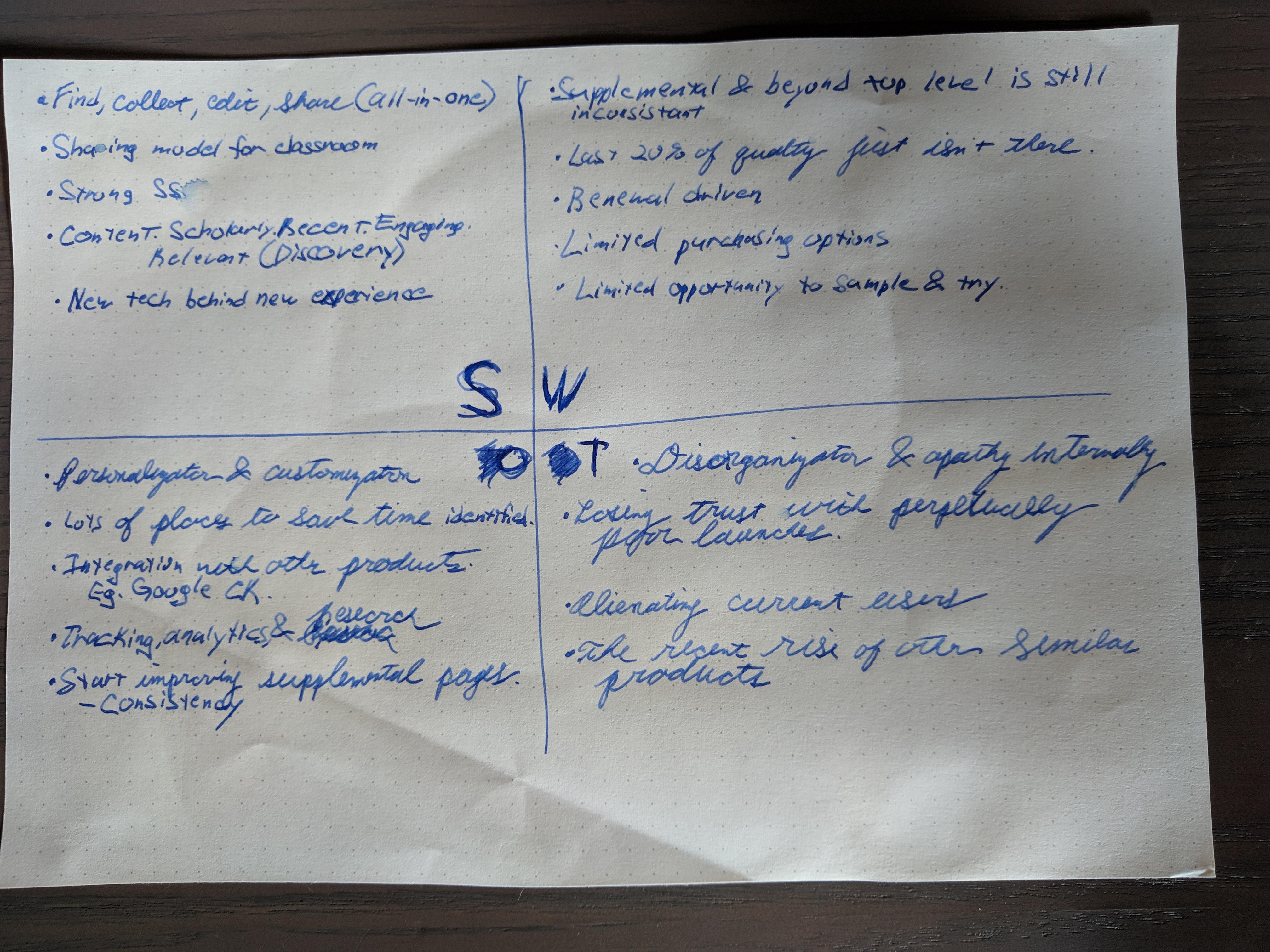

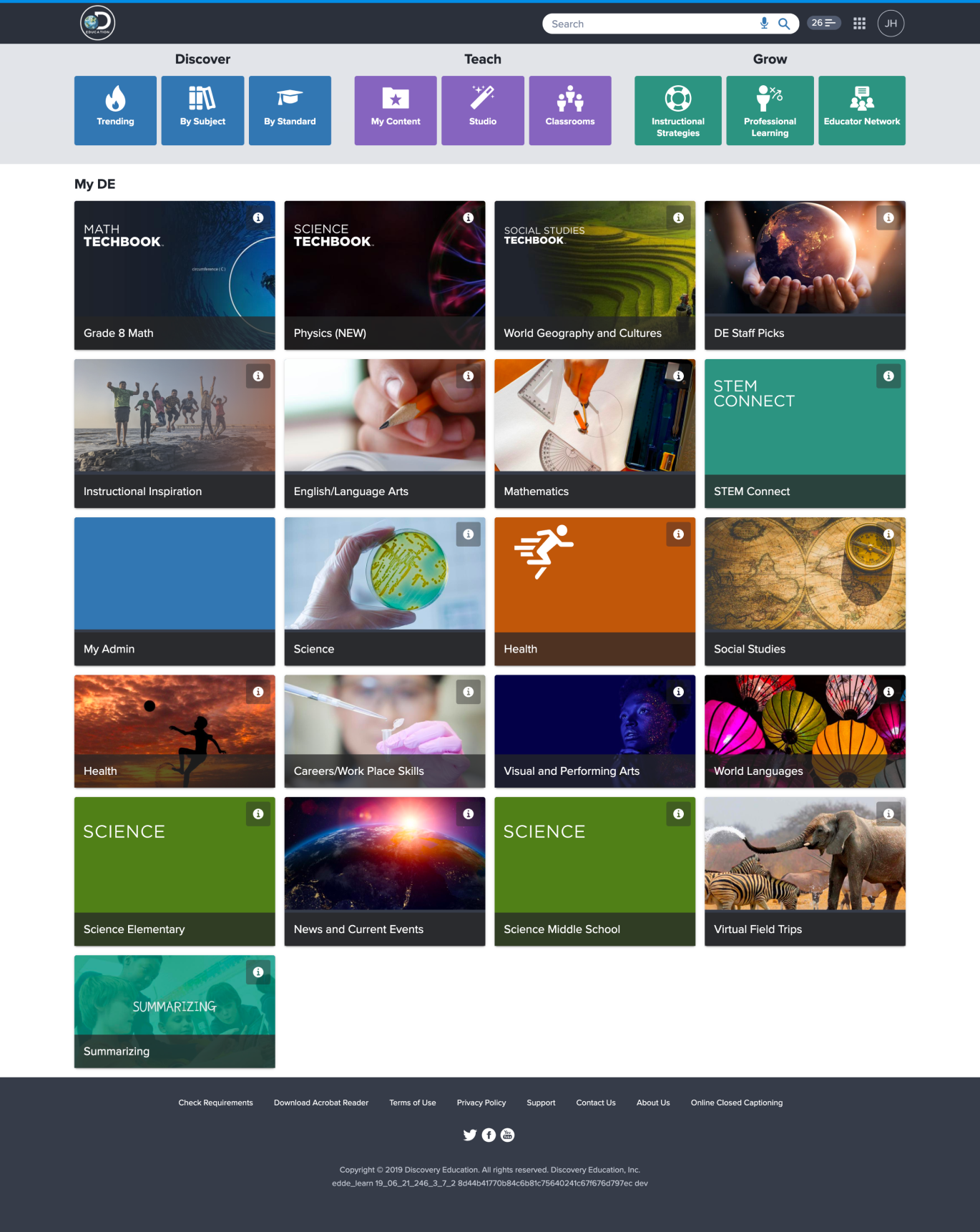

The old home experience had serious usability and engagement issues. By combining user feedback and analytics with an understanding of the current product suite and company transition, we were able to create a new landing experience for post-login users that increased both how often users engaged and the range of products they engaged with.

The first job to be done was obtaining any research and analytics that existed. Using Google Analytics and a newly implemented tool called Pendo (an analytics and tracking tool), we were able to pair quantitative data with qualitative data from usability and satisfaction research. Gathering usage data and funnels was very useful for the data itself, and it also enabled us to identify potential patterns to be aware of when running research sessions.

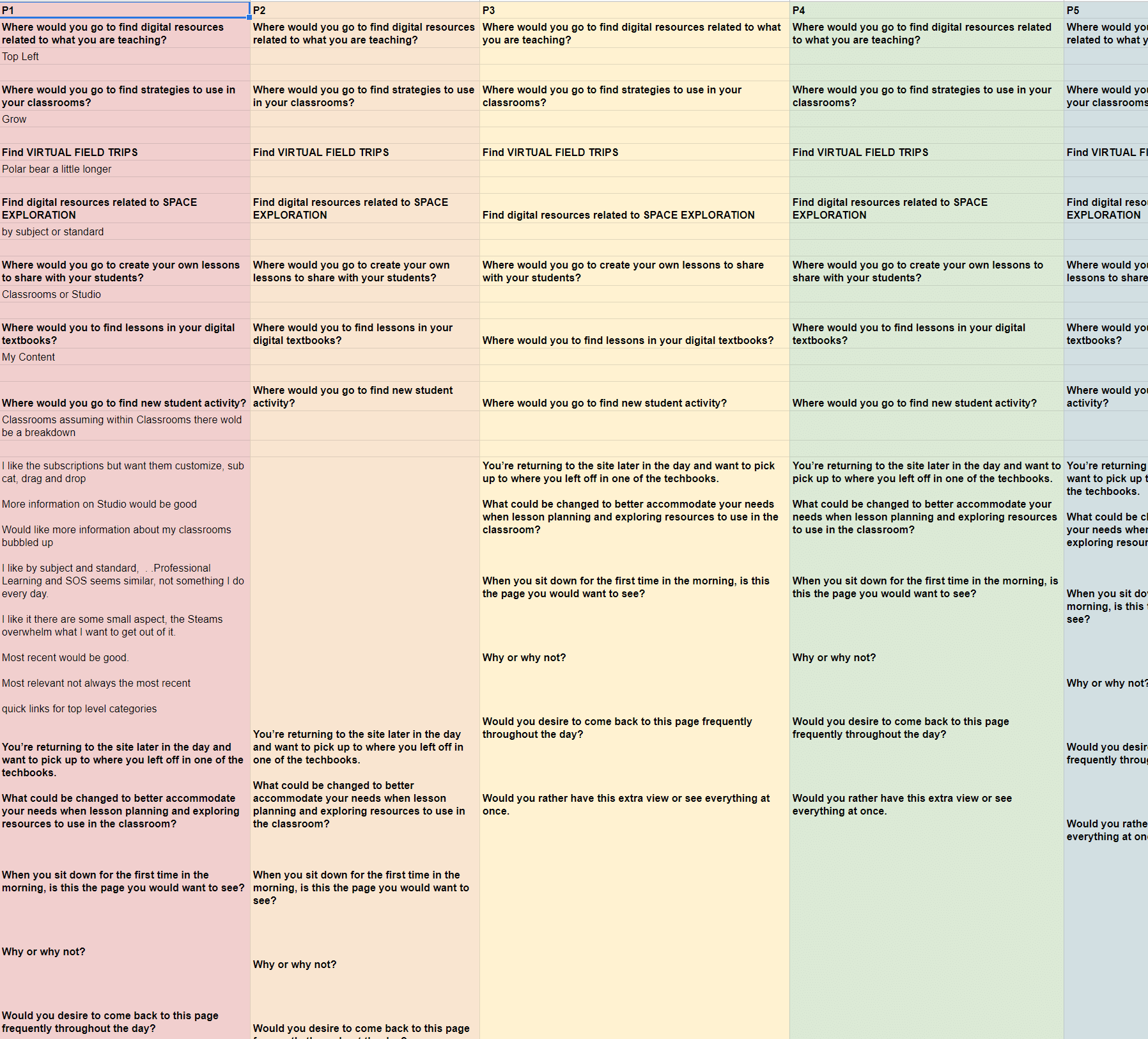

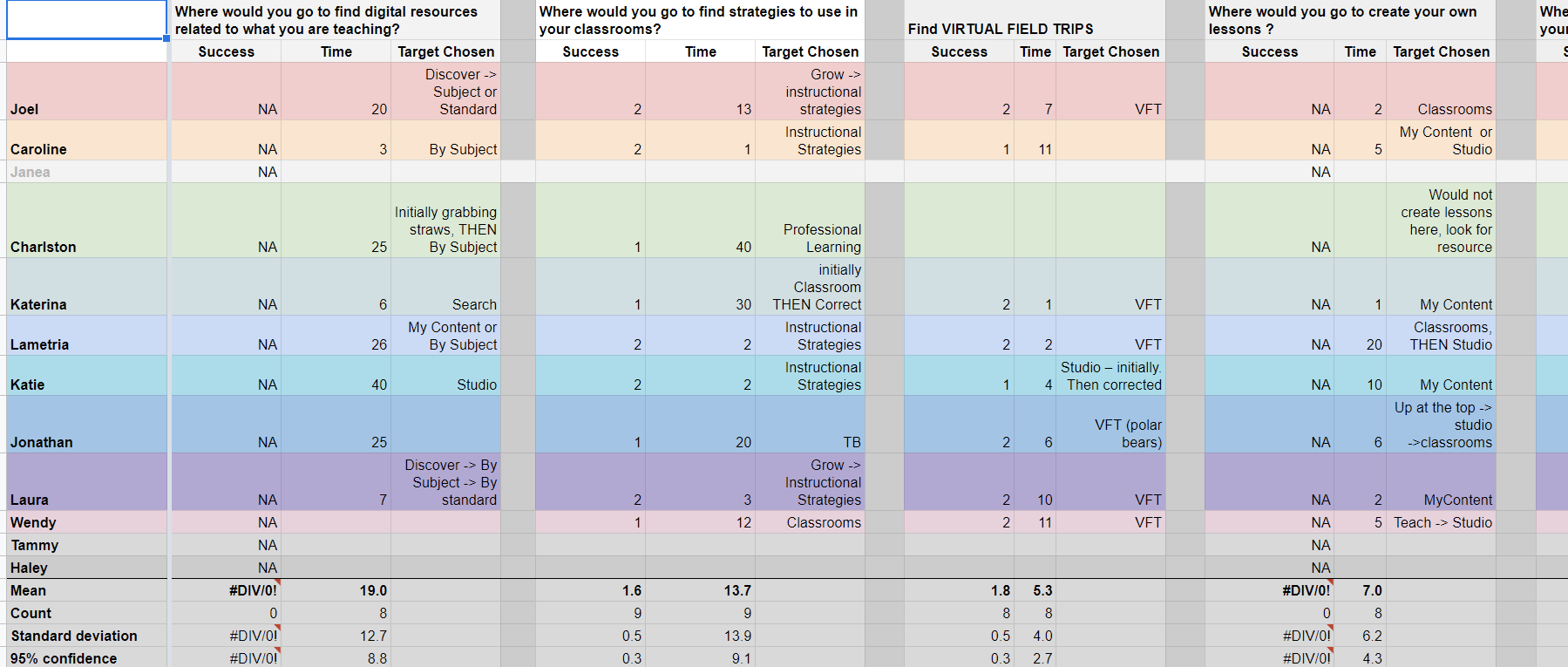

After years of tinkering and in light of recent software developments, I've developed a very cost-effective remote user research solution that we leveraged to get timely, digestible, and actionable data. By having assumptions, analytics, and a general awareness of user concerns, it took less than a week to facilitate and extract findings that were immediately integrated into prototypes and planning. Our primary methods included remote moderated and unmoderated usability testing, 1:1 interviews, and almost always surveys as follow-ups to studies and as their own studies. This combination of generative and evaluative research was critical in discovering and analyzing users' behavior, needs, and motivations.

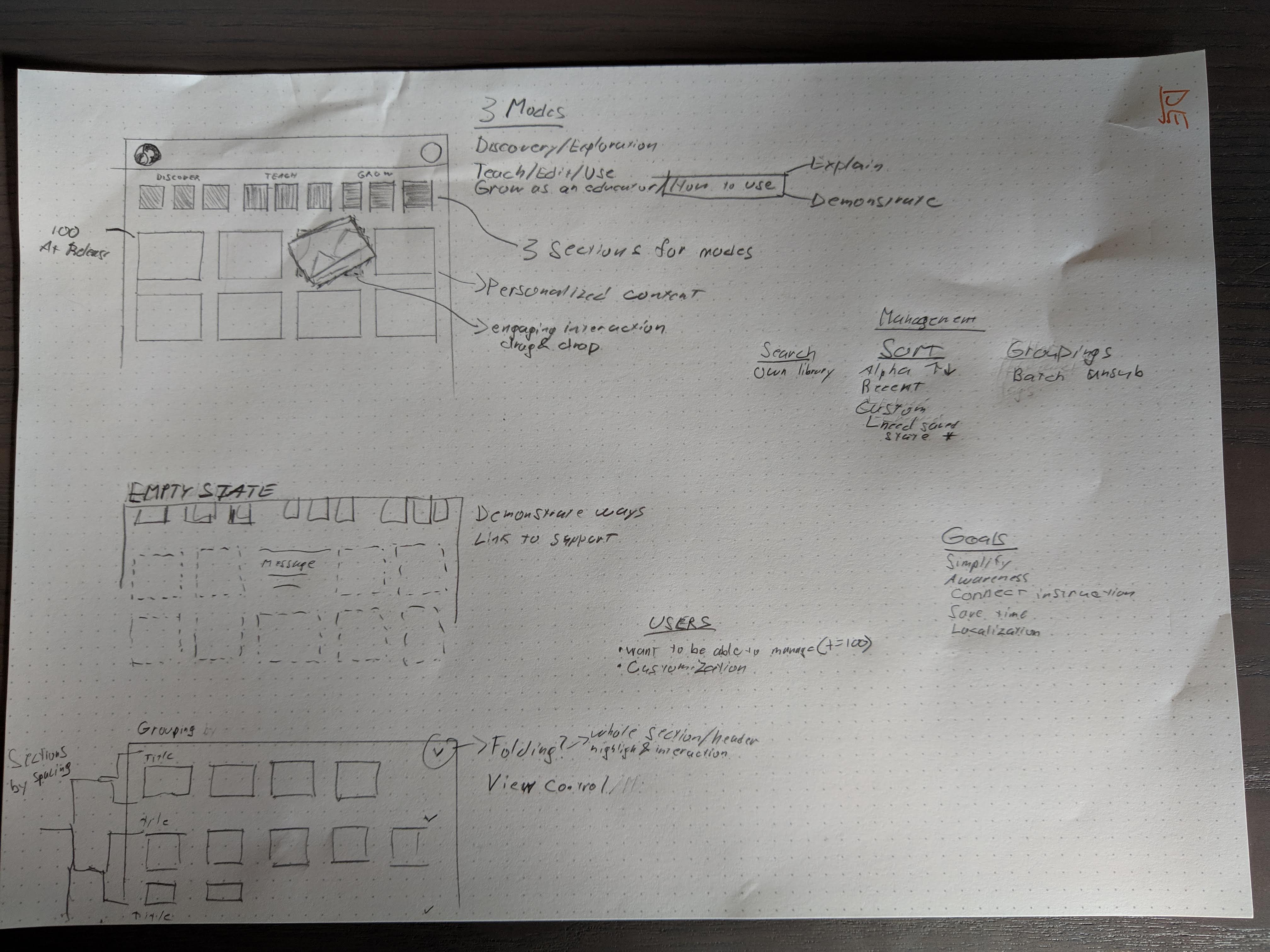

When evaluating the performance of the old home experience we needed two things: 1) to know what would save users time, and 2) a set of goals to guide the experience, along with the metrics that would indicate a successful product. The following were some of our high-level conclusions that were most relevant to our goals.

Social and activity feeds offered information that was too detailed and needed to be consolidated. Users were also inundated with certain users and content due to a weak sorting and throttling algorithm.
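As an illustration of the throttling idea only, here is a minimal sketch of capping how many items any single source can contribute to a feed. It is not the algorithm used in the product, which isn't described here; `FeedItem`, `throttle_feed`, the `score` field, and `max_per_author` are all hypothetical names chosen for the example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class FeedItem:
    author: str   # hypothetical: who produced the activity
    title: str
    score: float  # hypothetical relevance score; higher is better


def throttle_feed(items, max_per_author=2):
    """Order items by score and keep at most max_per_author items per author."""
    seen = Counter()
    kept = []
    for item in sorted(items, key=lambda i: i.score, reverse=True):
        if seen[item.author] < max_per_author:
            kept.append(item)
            seen[item.author] += 1
    return kept


# Made-up sample data: one very active source and one quieter one.
feed = [
    FeedItem("district_admin", "New assignment", 0.9),
    FeedItem("district_admin", "Updated assignment", 0.8),
    FeedItem("district_admin", "Another update", 0.7),
    FeedItem("science_team", "New video series", 0.6),
]
throttled = throttle_feed(feed, max_per_author=2)
print([item.title for item in throttled])
```

With the cap set to two, the third item from the busiest source is dropped so the quieter source still surfaces.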

We found that users rarely strayed from certain areas of the site regardless of the content on their homepage. At the same time, there wasn't any way for users to personalize their feeds other than district settings.

When we changed the hero experience, engagement spiked and stuck, but the experience below the hero area, while also improved, still performed at extremely low conversion rates.

Analytics showed that users would often use direct bookmarks to the areas of the site they found most valuable. This resulted in Home being the least used of the product landing pages and also reduced the diversity of products each user engaged with.

Prototype iterations were based on both research and evaluation of the old homepage, and also had to take into account all of the new experiences and goals. As a result, instead of fast, short iterations, the homepage followed a longer but less dense timeline leading up to the final release.

Instead of early quick revisions, the new homepage experience was done over time and changed as the experience itself changed. Home had a separate research track that ran in parallel with the others, since Home was often the step before a user engaged with other parts of the site, but we weren't finishing it until the rest of the site was done.

It was important to establish goals early in the process and identify guiding principles for research and design so that research was more valuable and we avoided unnecessary research that wasted time and money. Having these goals allowed us to create research templates. This meant that different people could collect data at different times while all returning roughly the same type of information. This enabled quicker synthesis and integration of research, and opportunities that were out of scope were easier to identify and delegate.

Some of our findings revealed that using larger cards with simple but relevant visuals provided a well-balanced and scannable experience. Cards were a familiar pattern throughout the new design system, which created a recognizable experience as soon as the user logged in. Strong visuals were important, but only in the short term. Long-term research found that visuals, if done poorly, actually slow users down. It took a great effort by the content team to work with us to accommodate meaningful imagery that was an indication of content and not just a design element.

The new experience set out to accommodate all users by leveraging a personalization system, a new layout based around teaching and learning modes, and a persistent navigation system that acted as a "mini" version of the homepage.

After months of research, testing, iterating, debate, and last-minute changes because someone noticed something, we arrived at something that we can confidently say delivers what the modern-day classroom teacher wants from the Discovery Education Experience.

Allow users to add and remove content, reorder that content, and group it all in ways that make sense. Allow the users to own the space and really personalize in order to facilitate whichever learning mode they're in.

By providing a Home experience that was anchored around the goals of users and then personalized content, we enabled a much more intentional and useful space. Home and the global navigation should be the hub where teachers can switch modes and pivot on the type of work they're doing. This same base experience can be seen within the global navigation as well, where all nine homepage functions are found.

By leveraging a personalized home experience, we were able to promote high-value content that users weren't aware of. This resulted in higher engagement across a wider range of products. In addition, reported satisfaction and perceived lesson planning effectiveness went up on surveys.

We wanted to connect users to the high-quality content they expected to find. Discovery Education is known for excellent content. We wanted to increase awareness of changes and updates within Discovery Education resources and content library. If new content was added that was relevant to the user, we wanted to increase the chance the user might encounter it. The second thing we wanted to do was notify the user of content within their feed. Keeping the homepage fresh but also consistent will never stop being a challenge.

We invested heavily in the fact that users have many modes and we wouldn't be able to predict which mode they'll arrive in. Making sure that users were aware of and had quick access to any path was imperative to us. Bouncing and pogo-sticking back to the home page, as in the previous experience, was unacceptable. With the integration of the global navigation, the home experience is with the users throughout, but only what they need: not the content itself, just the ability to access it.

Discovery Education is a global product and we take localization very seriously. We believe that every student and educator should have the same opportunities. With considerations for RTL languages, special character availability in typeface, cultural sensitivities and more, everything from content to the interface goes through a litany of checks and use cases to make sure the experience is as uniform and accommodating as possible.

While we wanted the Home page to tell a story and indicate what the Discovery Experience offers, we also recognized that this isn't a traditional homepage experience. Users are logging in with agendas and tasks they need to complete. The homepage needs to be as functional as it is informative.

We emphasized the highest-priority and most frequent tasks users visited Discovery Education for, and crafted an experience in which these tasks held a large influence over persona and design creation. It was different from the rest of the experience, but remained consistent by using elements from other parts of the experience, such as photographic cards.

We carefully empowered users to create and own their Discovery Education experience and collaborated with a number of teams to offer direct support through a number of channels.

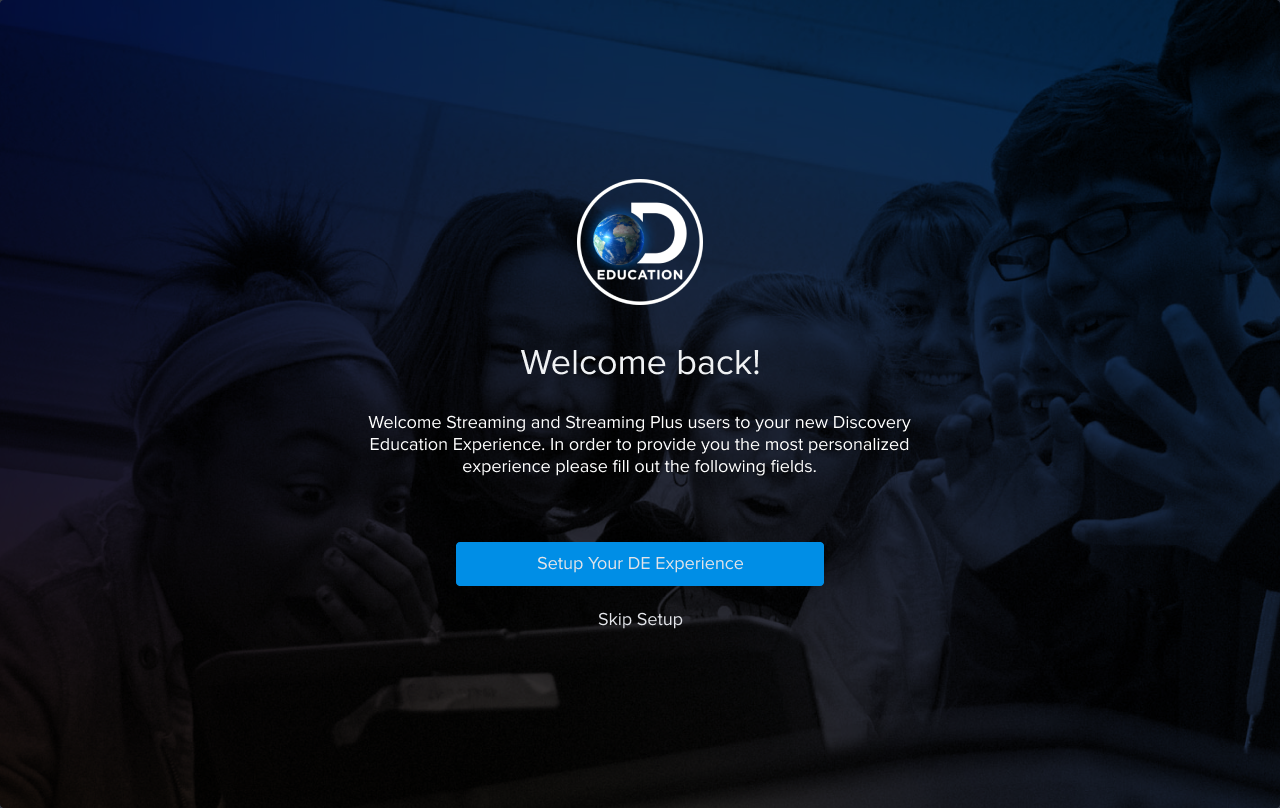

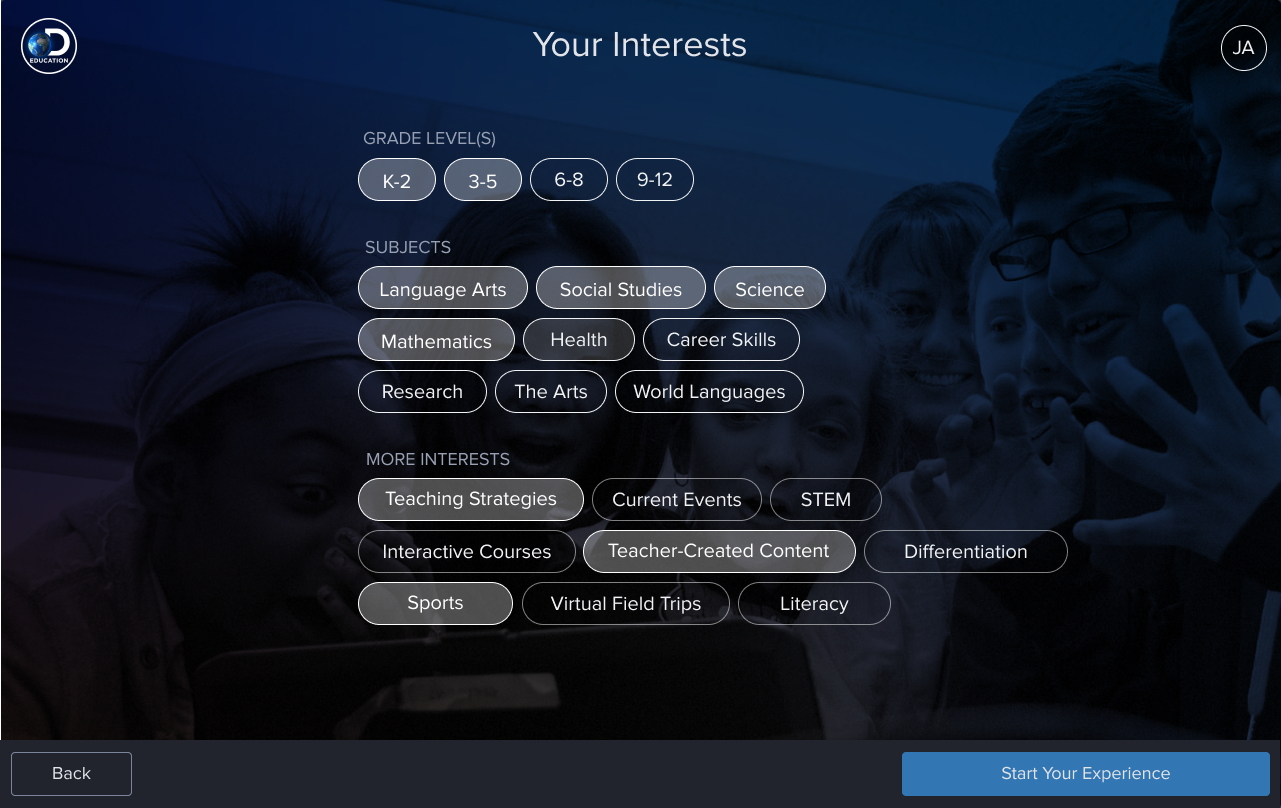

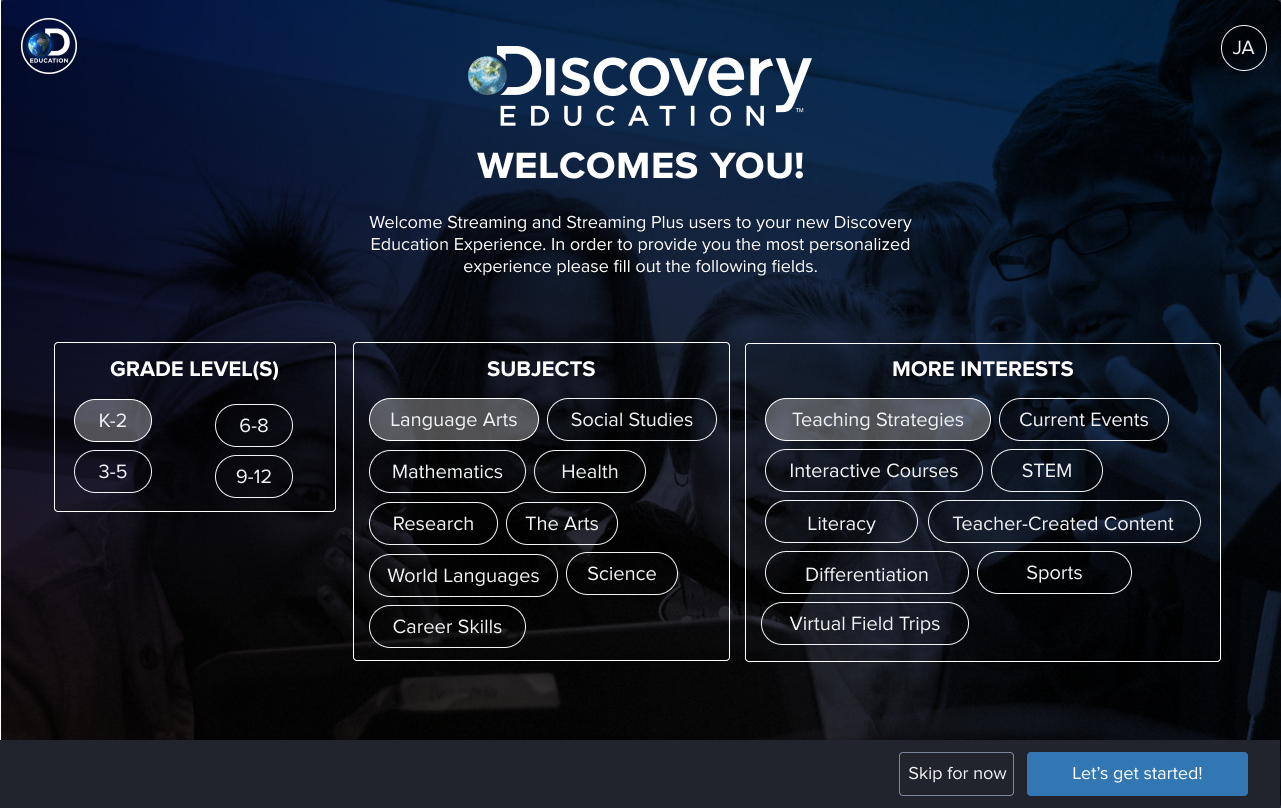

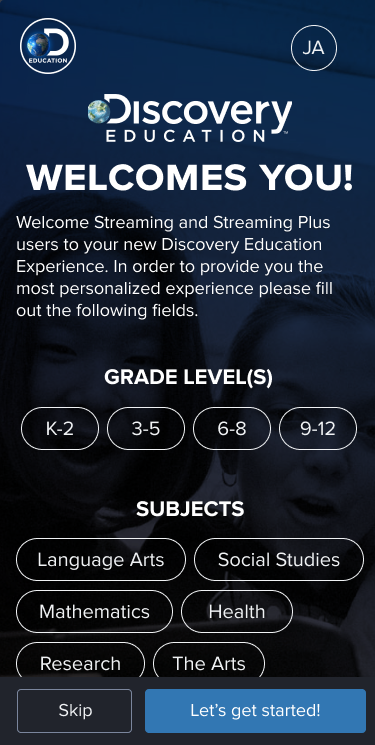

Early on in our soft-launch timeline we identified that we were struggling to get users to complete the initial onboarding screen forms. In order for users to have the best experience, and have the most relevant content served to them, we needed to know more about each user. We also wanted to remind users about settings they might have overlooked and enable them to make any adjustments to ease their transition to a new product.

What started out as a three-screen experience ended up becoming a single screen, with future plans to replace it with a much leaner and less intrusive experience. Initially the intentions were good: allow users to refresh and set up their profiles. For a number of reasons we explored with users, the immediate value just wasn't communicated or implemented well enough.

The initial prototype called for three on-boarding screens. With less than stellar performance we reduced the experience to two screens and immediately noticed an improvement.

With performance still below where we wanted it to be, we again reduced the flow to a single screen, and again, noticed an improvement. We saw an especially large increase in mobile users making selections. We discovered that it was easier for users to see that there was additional content and as a result users were more likely to scroll.

We have since concluded that removing the on-boarding experience screen/page and directing the user to their profile is the best option. Adjusting the on-boarding in this way will provide opportunities in other areas as well as being a less intrusive experience overall.

One of the key reasons educators skipped the screens was that they were unaware of significant changes to a familiar experience. This was different from simply encountering something new. Users already had mental models and assumptions about their profile and the options they selected. They weren't used to those selections meaning much and continued to assume the same thing.

A critical issue we dealt with was trying to reach educators before they used the experience. We wanted to establish a voice with users to speak to them and explain exactly why the on-boarding experience was worth their time. The last thing a teacher needed was an additional interruption, never mind one that resulted in an entire experience change. The less prepared and exposed an educator was, the more likely they were to skip the onboarding experience.

The product suite for Discovery Education is already considerable, but the number of provisioned products and services that districts and users can purchase, plus the combinations they make, is almost endless. This results in certain products looking very similar to other products and sub-products.

Prior to this new experience, products were usually recognized by their text alone. Now, with the design system set for a more engaging experience, we needed to create system solutions that weren't in place before. We needed to work with the content team to obtain and generate images for each product. This required a whole new sub-project for the team to focus on and delegate time towards, but in the end resulted in an experience that was much more scannable and easier to understand.

Reducing the number of screens and effort required to engage with the experience for the first time wasn't just to increase conversions. One of the more consistent pieces of feedback we received was about class interruption for students and teachers. Users were generally signed in only when they had a task to complete or a specific purpose, so any pause was seen as an interruption.

By reducing the on-boarding experience and working with the Marketing and Community teams much more proactively, we were able to reduce friction, increase engagement with the profile customization, and increase general satisfaction ratings.

One of the major improvements with the new experience was our new in-product messaging, support, and analytics system. We established three goals to account for when exploring solutions. Options had to be accessible, contextual, and explorable. The user had to be able to easily access help at any time during the experience. The help they access should be contextual and initially present information about the current experience. Research has shown that users tend to want specific support related to a current task over general support. And lastly, if the user wasn't able to find a solution, they should have other options such as related content or a way to directly reach out for support.

Figuring out how we would leverage user data to create better experiences required thorough planning that would influence the entire data schema of each person's profile. We had to account for what was currently available, what we would be collecting, what we COULD be collecting in the distant future, technology limitations, optimizations, and especially security. Security was an additional challenge because at all times we were required to abstract and anonymize each user's information. When we needed to access a user's information, we had to go through additional steps to identify them while retaining anonymity.
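One common way to keep analytics records abstracted from identities while still allowing an authorized lookup is keyed pseudonymization. The sketch below is a generic illustration under that assumption, not the approach the team actually used; `SECRET_KEY`, `pseudonymize`, `record_event`, `events_for`, and the sample user ID are hypothetical.

```python
import hashlib
import hmac

# Hypothetical key; in practice it would live outside the analytics store.
SECRET_KEY = b"example-key-kept-outside-analytics"

# Analytics store keyed by pseudonym, so raw user IDs never appear in it.
events = {}


def pseudonymize(user_id: str, secret_key: bytes = SECRET_KEY) -> str:
    """Derive a stable pseudonym from a user ID using HMAC-SHA256."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def record_event(user_id: str, event: str) -> None:
    """Store an event under the user's pseudonym rather than their ID."""
    events.setdefault(pseudonymize(user_id), []).append(event)


def events_for(user_id: str) -> list:
    """The extra lookup step: only a holder of the key can map an ID to its records."""
    return events.get(pseudonymize(user_id), [])


record_event("teacher-123", "opened_home")
record_event("teacher-123", "reordered_cards")
print(events_for("teacher-123"))
```

Because the pseudonym is derived with a secret key, someone browsing the stored events sees only opaque identifiers, while a service holding the key can still find a specific user's records when that is required.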

A common theme we encountered during research was the fact that most users assumed personalization and customization. Time and time again, when testing experiences, teachers had assumptions about how the experience would be personalized for them.

It wasn't just that users wanted personalization; they assumed and expected it. Because this theme was so widely observed, it quickly elevated certain features and experiences that were relevant to personalization and customization.

When the drag and drop interactions were introduced, we often found users playing with them in their free time or while waiting during research sessions. This specific little interaction was similar to clicking the top of a pen: a small action that let users find comfort and occupy time just because they enjoyed how it felt. In later iterations the drag and drop interaction was improved to raise and skew a little on rotation in order to create a more playful experience and a stronger interaction affordance.

With the goal of the experience being so focused on personalization, an interesting challenge presented itself. How close to the real thing do we need to get when testing experiences? In the end it depended on the situation. If we tested something specific to personalization we often had to create a unique experience for each user, which then impacted our organization and workflow system since we then had to account for versions that had not been considered previously.

If we were testing something that wasn't directly related to personalization then it would depend on how much the experience was influenced. We would leverage one of our personas that aligned with a majority of teachers so that content would at least be within their subject and therefore still accurate enough to suspend a bit of disbelief during testing.

At the time of writing we were in the analysis and monitoring phase of the product cycle. We set up satisfaction surveys, product and event monitoring, and feedback collection teams that were responsible for reporting every quarter. Different people on different teams at different levels all have different goals. I'm working with the appropriate people to help guide them to the most valuable metrics and performance indicators based on company goals and philosophies.